Day 0 and 1 of my time at MIT Reality Hack.

That was insane: the energy was amazing, the creative drive unreal, the problems challenging, the learning academic. I haven’t felt like that in a very long time. No problem at work has ever stimulated me that much, no vibe was ever that wavy, nothing has been that hard, and I have never felt that kind of energy before.

Hope I can go back. I’ve found what I want.

I have a million ideas now, so many blog post starters in my Notes app, so many project ideas. So many racing thoughts.

I want to take you on my journey of Reality Hack ’23, a bit shortened and a bit expanded.

Day 0: Travel

Syracuse to Boston via Amtrak takes 9 hours. Next time I’ll just fly; I hope one day we get high-speed rail. After arriving at a hostel and seeing my bunkmate’s mess underneath me, I checked into a hotel that night. To get to the hotel I took a Lyft, which started a pattern of fascinating Lyft drivers in Boston:

Driver 1: I have never had someone talk to me about securities and crypto at such length, or about Tel Aviv. This man had learned the lesson of “not your keys, not your coins”. If you think of a crypto scam, he’d been affected by it (Mt. Gox, Celsius, to name a few). He went from a chemistry major to finance. He also told me all about ICOs (Initial Coin Offerings) via Telegram channels. Welcome to Boston.

Eventually went to sleep at 1 AM.

The end of day 0.

Day 1: First day of MIT Reality Hack 2023

Wake up: 6AM.

The hotel was a 30-minute walk from the MIT Media Lab, where check-in was: cut through a park and walk the compact streets of Cambridge. I love walkable cities. Cambridge is pretty walkable; around MIT there are even fully protected sidewalks alongside protected bike lanes. I think this might make some Central New York politicians faint at the inconvenience to cars, or at the idea of protecting cyclists and pedestrians (I’m looking to move). While I’d heard bad things about Boston drivers (I’m going to lump all of the surrounding areas under “Boston” for this :P ), they were generally nice, but that’s been true of most places I’ve been so far.

I arrived at the MIT Media Lab at 8AM for check-in and received my badge. Time to mingle with the sponsors and network.

Picture of me with my badge.

Besides check-in, today is workshop day, opening ceremony day, and team formation day.

Arriving at the sponsor floor was when it became very real where I was.

In this video we have a few of the sponsors: Vive, FCAT, Esri, XR Terra, Ultraleap, Dolby.io and right at the end is Looking Glass Factory. A full list of sponsors can be found here (scroll down).

General tip: keep a notebook or doc when meeting new people. Write down their name, some information about them, and maybe a physical description so you don’t fumble their name when you meet them again.

ESRI: I met Nick from ESRI, and he explained their new product, ArcGIS Maps SDK for Unity, which lets you view 3D map data in Unity (there’s an Unreal Engine version too). It looks good; it looks really good. Growing up playing COD (Call of Duty), I wished I could play in my neighborhood, and with this you could. Also, I’ve tried getting 3D GIS data before, and it’s hard. The textures are locked down, and the only ways to get building meshes were 3dbuildings.com or using RenderDoc to pull the geometry out of Google Earth. I’m looking forward to using this in the future.

FCAT: Did you know Fidelity has an R&D lab? I didn’t until then. I had also never used a VR headset until that moment. Immediately sold (I bought a Quest 2 when I got back).

Ultraleap: Thank you to Chris for explaining the OpenXR standard to me and how you all are extending it by building onto its skeleton tracking. Very interesting stuff; I hope I use it in the future. I don’t have a photo of this booth, but here is one of their demo videos:

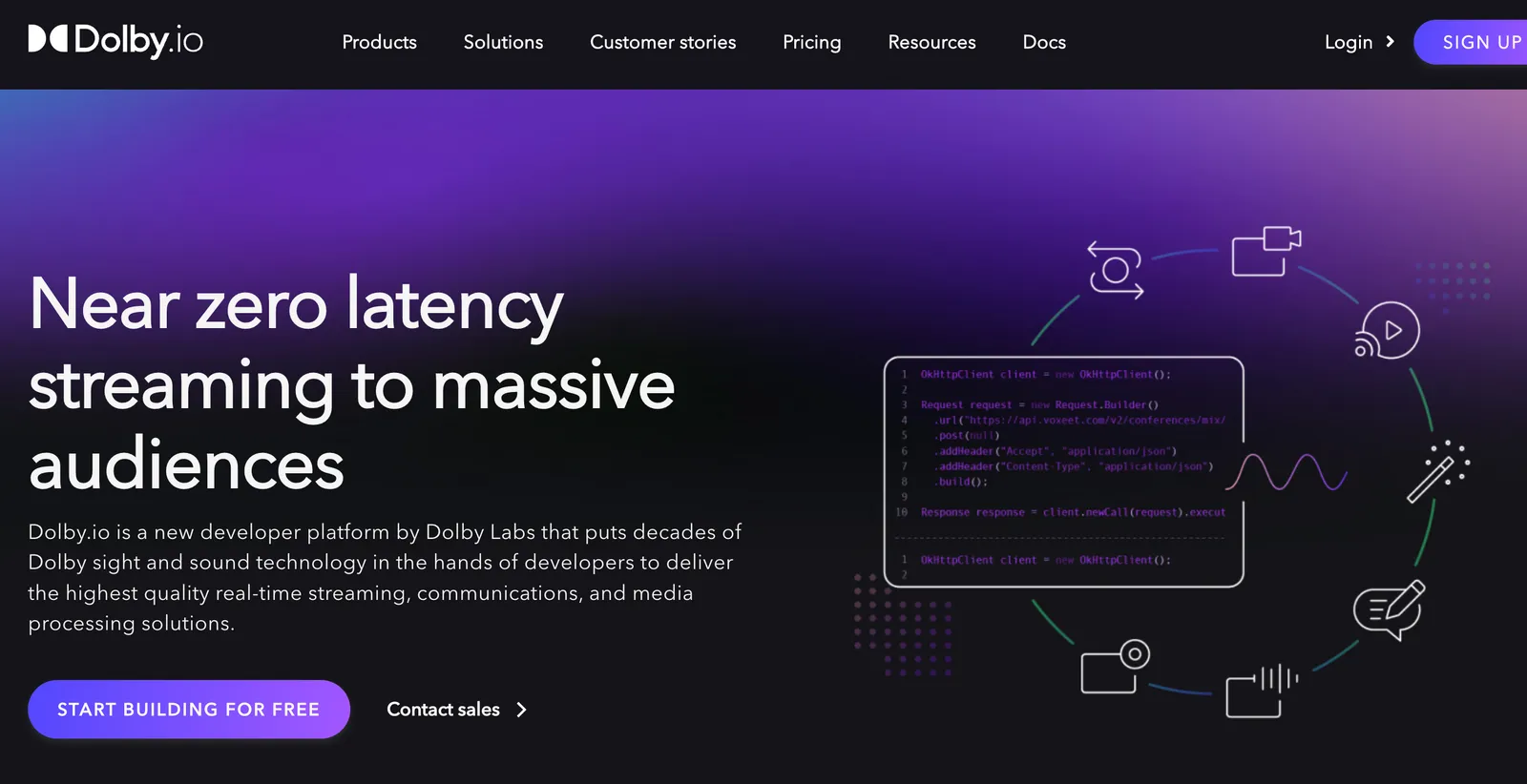

Dolby.io: You know how Mixer had the FTL (Faster Than Light) protocol (there’s a reverse-engineering blog post of it)? And if you stream on YouTube or Twitch, there is a big delay (I learned this while tuning that delay for Control My Lights). What if there was a service that allowed for sub-second streaming? Well, now there is: Dolby.io, using WebRTC. Incredibly stoked for this.

Scroll down to “Workshop 7: Spatial Audio Overview” (or just Ctrl+f/CMD+f and go right there) to see this in action in Unity.

Lynx: This is very cool: a high-end passthrough VR/AR headset for way cheaper than a Microsoft HoloLens: https://www.lynx-r.com/products/lynx-r1-headset. I ran into them walking back to my hotel at night, and it was nice to see a friendly face. Why is Cambridge so dead silent at night? Was it because the colleges were out?

HaptX: Didn’t get to try, they look wild (in a good way). Heard they use micro-fluidics to control the pressure you feel, insane. Hope I can try it out someday.

Born: Nice guys, cool demo of their Forest of Resilience. Also, had great stickers!

Looking Glass Factory: video doesn’t portray these well; the 3D effect is real.

Met Gabriel (new person) in line for something. It’s serendipitous how you meet people at Reality Hack.

Lunch happens - met more people.

Check out this clock in front of iHQ:

1pm - The workshops:

Note: These were the workshops. You’ll quickly notice that a lot of cool stuff was happening at the same time. I wish it had been spread over a few days, but any one you picked was good.

The first workshop: Looking Glass Factory, led by Bryan Chris Brown.

These are light field displays: they show a different image depending on where you are in relation to the screen. The software renders a sprite-sheet-like grid of views, called a “Quilt”, and I guess the internal electronics do the rest. You can even hook it straight into Unity/Unreal. Check out their docs here.
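To make the quilt idea a bit more concrete, here’s a small Python sketch of the bookkeeping: finding where one view’s tile sits inside a quilt image, and which view a viewer at a given angle would see. The tile ordering (view 0 at the bottom-left, counting left to right and then upward), the evenly spread viewing cone, the function names, and the example numbers are all my assumptions for illustration, not Looking Glass’s actual API, so check their docs before relying on any of it.

```python
def quilt_tile_rect(view_index: int, columns: int, rows: int,
                    quilt_width: int, quilt_height: int) -> tuple[int, int, int, int]:
    """Return the (x, y, width, height) pixel rectangle of one view inside a quilt.

    Assumption: view 0 is the bottom-left tile and views count left to right,
    then bottom to top. Pixel y is measured from the top of the image.
    """
    if not 0 <= view_index < columns * rows:
        raise ValueError("view_index out of range")
    tile_w = quilt_width // columns
    tile_h = quilt_height // rows
    col = view_index % columns
    row_from_bottom = view_index // columns
    x = col * tile_w
    y = (rows - 1 - row_from_bottom) * tile_h  # flip: image rows start at the top
    return x, y, tile_w, tile_h


def view_for_angle(angle_deg: float, view_count: int, view_cone_deg: float) -> int:
    """Pick the view a viewer at angle_deg sees (0 = straight on, negative = left).

    Hypothetical model: views are spread evenly across the viewing cone,
    with view 0 at the left edge.
    """
    half = view_cone_deg / 2
    clamped = max(-half, min(half, angle_deg))
    t = (clamped + half) / view_cone_deg  # 0.0 at the left edge, 1.0 at the right
    return min(view_count - 1, int(t * view_count))


# Example numbers only: an 8 x 6 quilt (48 views) in a 4096 x 4096 image.
view = view_for_angle(0.0, view_count=48, view_cone_deg=40.0)
print(view, quilt_tile_rect(view, 8, 6, 4096, 4096))  # prints: 24 (0, 1364, 512, 682)
```

The point is just that a quilt is an ordinary image: every tile is one camera position, and the display’s optics decide which tile reaches your eye.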

2nd workshop: Cognitive3D workshop.

I decided to rest and stayed in the same room for the Cognitive3D workshop. Cognitive3D is a tool that lets you gather spatial analytics in a VR environment, as well as eye-tracking analytics. I can see using this to iterate on an interaction and fine-tune it, especially in game design.

3rd workshop: Intro to the hardware hack

aka “DIY Open Source Hardware”, led by Bryan from Looking Glass/Project North Star and Lucas De Bonet of LucidVR.

Meeting Lucas was so cool after seeing him in a Linus Tech Tips video.

Both talked at length about community building and about running open-source projects that challenge the industry. This got me pumped for the hardware hack.

That’s Bryan and Project North Star; check out his light-up shoes.

These are the LucidVR gloves:

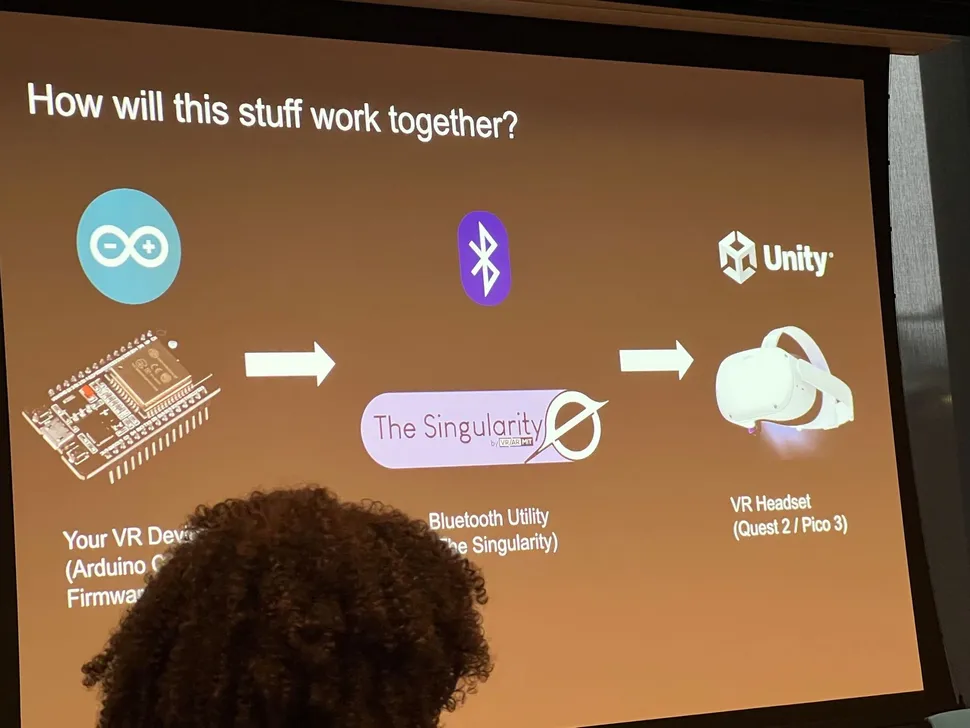

Workshop 4: Walking Through the Hardware Hack

This was basically a “what to expect”: how to scope, what you’ll be doing, and what you’ll get in terms of hardware. But the crown jewel of this talk was TheSingularity: “This is a software development kit for Unity that allows you to make microcontroller-based VR hardware communicate to your Unity project. Currently it supports communication via Bluetooth Serial between microcontrollers and Android-based VR headsets.” (from its README.md). This was (and is) a very new library made by students over winter break, so everything about your projects will be alpha.

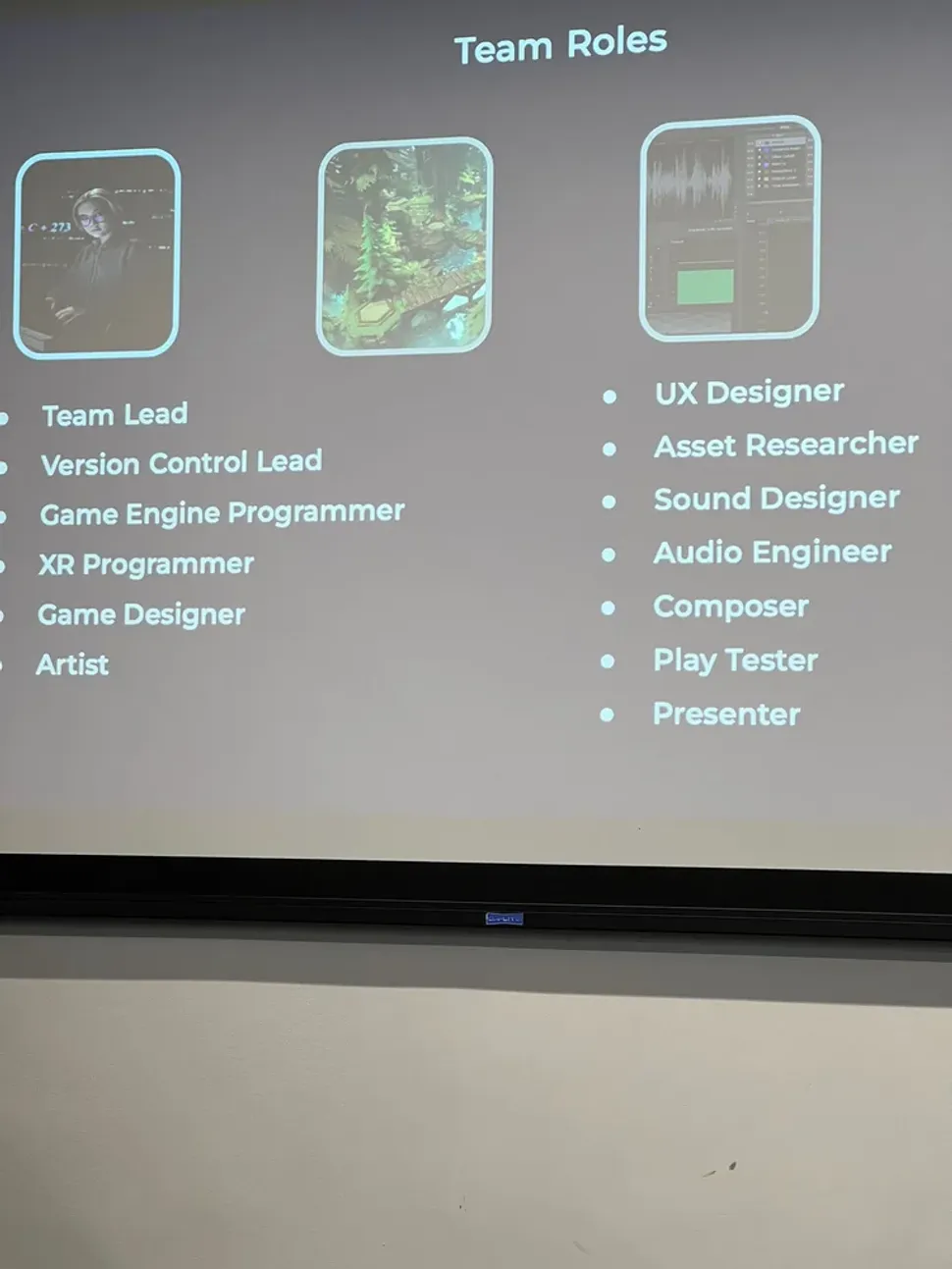

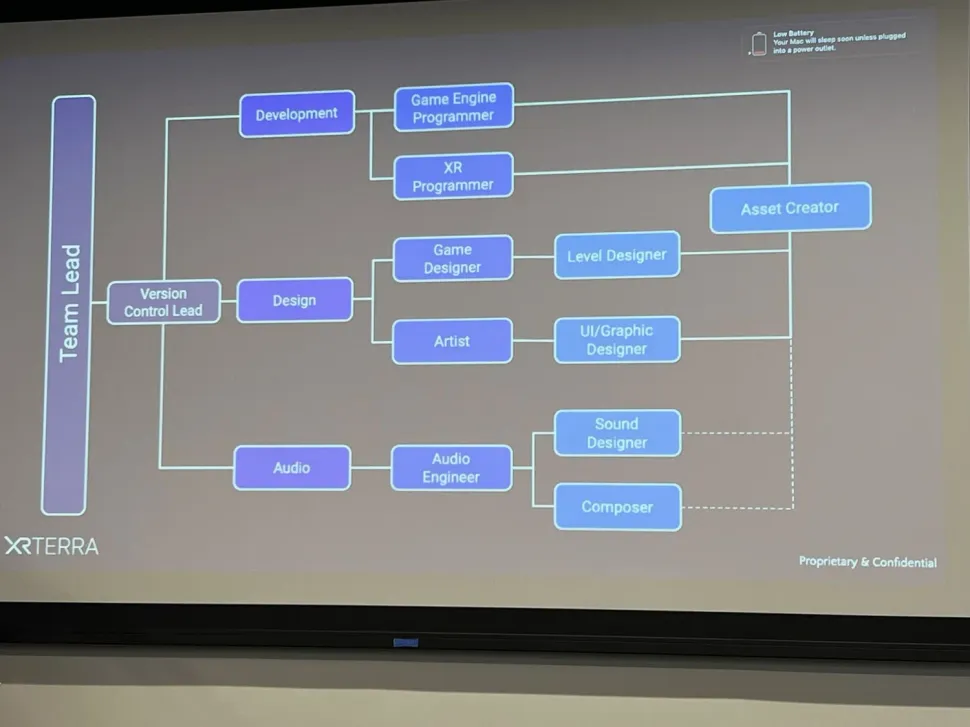

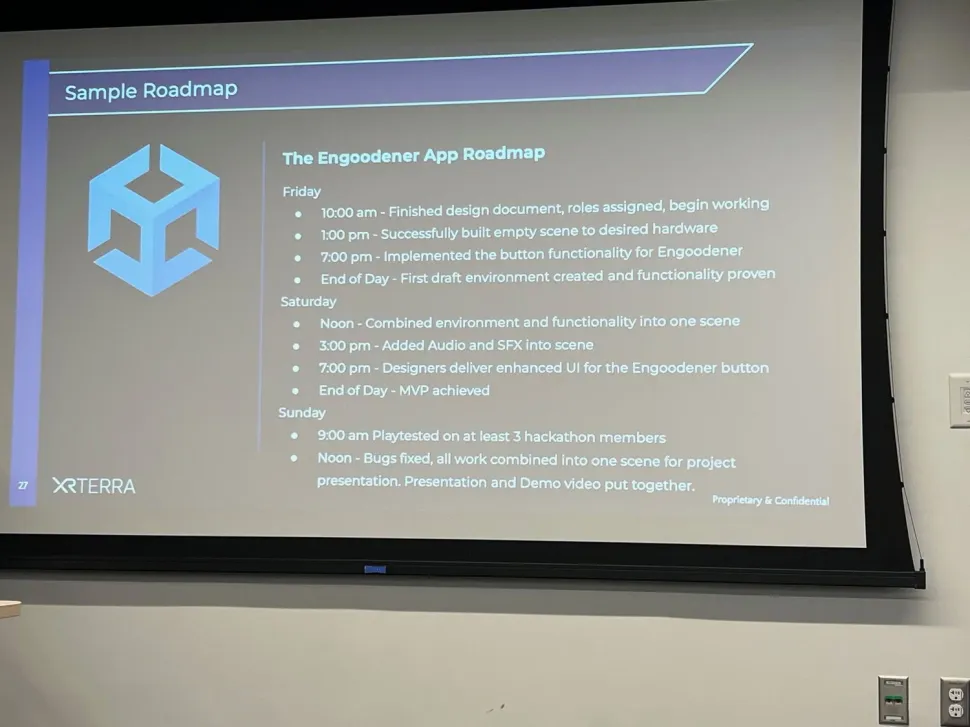

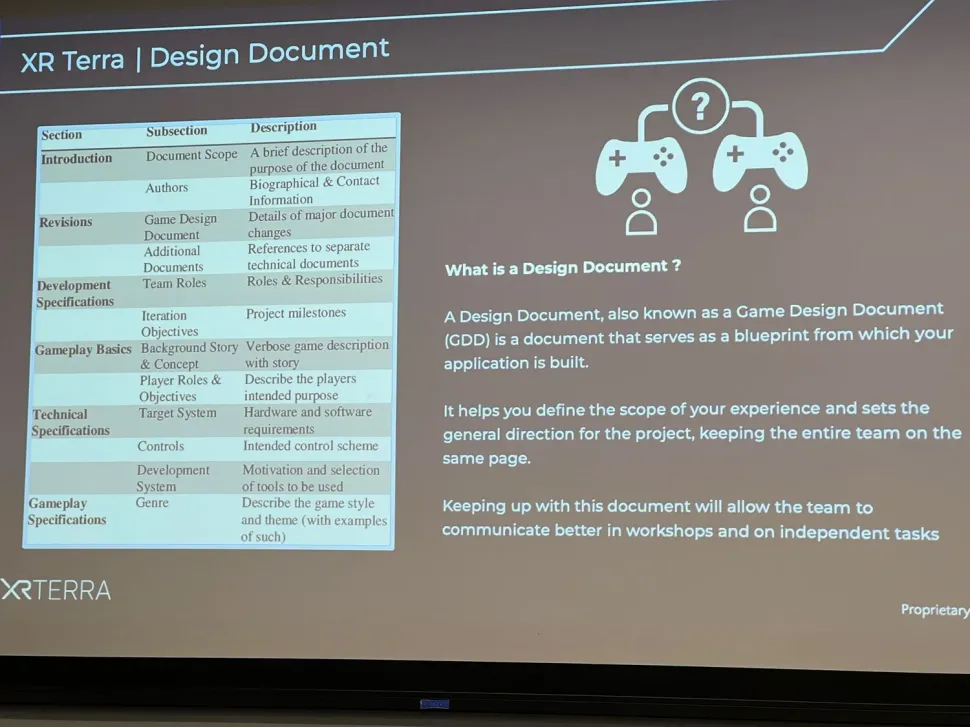

Workshop 5: Rapid Prototyping and Team Management by XR Terra.

Fantastic workshop on project management. I probably should have asked for the slides and studied them further. I think we did good project management, but there were areas to improve on.

Workshop 6: Spatial Audio Advanced Techniques by Shirley Spikes of Magic Leap.

“Advanced techniques and ideas to think about when designing audio environments and interactions for AR and VR technology.” Met Shirley IRL for the first time and got a lesson in spatial audio techniques: diegetic vs. non-diegetic sounds, how do you mix audio in Unity, how do you mix that audio to give the sense that a sound is facing away from you, and what software can help with spatial audio (Steam Audio, Google Resonance Audio)?
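Here’s a rough Python sketch of a few of those ideas, assuming a simple top-down 2D model: inverse-distance rolloff for distance, a cardioid-style directivity term so a source facing away from you gets quieter, and constant-power stereo panning for left/right. The function names and parameters are hypothetical, and this is not how any particular engine does it; Steam Audio and Resonance Audio go much further (HRTF-based rendering, among other things), which also fixes the front/back confusion that plain panning has.

```python
import math


def distance_gain(distance: float, ref_distance: float = 1.0) -> float:
    """Inverse-distance rolloff, clamped so very close sources don't exceed 1.0."""
    return ref_distance / max(distance, ref_distance)


def directivity_gain(src, src_forward, listener, alpha: float = 0.5) -> float:
    """First-order directivity: g = (1 - alpha) + alpha * cos(theta).

    theta is the angle between where the source faces and the direction to the
    listener. alpha = 0 is omnidirectional; alpha = 0.5 is a cardioid that is
    silent directly behind the source.
    """
    tx, ty = listener[0] - src[0], listener[1] - src[1]
    fx, fy = src_forward
    norm = math.hypot(tx, ty) * math.hypot(fx, fy)
    if norm == 0.0:
        return 1.0
    cos_theta = (tx * fx + ty * fy) / norm
    return (1.0 - alpha) + alpha * cos_theta


def constant_power_pan(azimuth: float) -> tuple[float, float]:
    """Map azimuth (-pi/2 = hard left, +pi/2 = hard right) to (left, right) gains.

    left**2 + right**2 == 1, so perceived loudness stays steady as a sound moves.
    """
    a = max(-math.pi / 2, min(math.pi / 2, azimuth))
    p = (a + math.pi / 2) / 2  # now in [0, pi/2]
    return math.cos(p), math.sin(p)


def spatialize(src, src_forward, listener=(0.0, 0.0)) -> tuple[float, float]:
    """(left, right) gains for a listener at `listener` facing +y, with +x to the right."""
    dx, dy = src[0] - listener[0], src[1] - listener[1]
    # Plain stereo panning can't tell front from back, so fold rear sources forward.
    azimuth = math.atan2(dx, abs(dy))
    gain = distance_gain(math.hypot(dx, dy)) * directivity_gain(src, src_forward, listener)
    left, right = constant_power_pan(azimuth)
    return left * gain, right * gain
```

The directivity term is the part that answers the “facing away” question: with `alpha = 0.5`, a source pointed straight at you, e.g. `spatialize((0.0, 2.0), (0.0, -1.0))`, plays at full directivity gain, while the same source turned around with forward `(0.0, 1.0)` drops to silence.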

Workshop 7: Spatial Audio Overview - Dolby.io by Dan Zeitman.

In this one we went through using Dolby.io to stream and receive video in Unity; it was a hands-on project. Dolby.io is pretty sweet. I could also see using it as a data-transfer API: if you encode your data as RGB pixel values and unpack them on the receiving end, you could pump data through it, kind of like packing data into RGBA texture values so shaders can crunch it on the GPU.

Also, this is where I met Yash, because we had the same laptop skin and were both doing the hardware track.
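Here’s a toy Python sketch of that data-over-video idea, purely hypothetical and not using any Dolby.io API: bytes become black or white blocks in an RGB frame, and the receiver averages each block and thresholds it. I used big blocks instead of packing one byte per color channel because video sent over WebRTC is lossy-compressed, so exact pixel values won’t survive the trip; the `add_noise` helper is only a crude stand-in for those compression artifacts. All function names here are made up for illustration.

```python
import random

Pixel = tuple[int, int, int]
Frame = list[list[Pixel]]


def encode_bytes_to_frame(data: bytes, width: int, height: int, cell: int = 8) -> Frame:
    """Pack bytes into an RGB frame, one bit per cell x cell block of black or white."""
    cols, rows = width // cell, height // cell
    bits = [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]  # MSB first
    if len(bits) > cols * rows:
        raise ValueError("data does not fit in one frame")
    frame: Frame = [[(0, 0, 0)] * width for _ in range(height)]
    for k, bit in enumerate(bits):
        value = 255 if bit else 0
        top, left = (k // cols) * cell, (k % cols) * cell
        for y in range(top, top + cell):
            for x in range(left, left + cell):
                frame[y][x] = (value, value, value)
    return frame


def decode_frame_to_bytes(frame: Frame, n_bytes: int, cell: int = 8) -> bytes:
    """Recover n_bytes by averaging each block and thresholding at mid-gray."""
    cols = len(frame[0]) // cell
    out = bytearray()
    for b in range(n_bytes):
        byte = 0
        for i in range(8):
            k = b * 8 + i
            top, left = (k // cols) * cell, (k % cols) * cell
            total = sum(sum(frame[y][x]) for y in range(top, top + cell)
                        for x in range(left, left + cell))
            mean = total / (3 * cell * cell)  # average over every channel in the block
            byte = (byte << 1) | (1 if mean >= 128 else 0)
        out.append(byte)
    return bytes(out)


def add_noise(frame: Frame, amount: int = 40, seed: int = 0) -> Frame:
    """Crude stand-in for compression artifacts: jitter every channel by +/- amount."""
    rng = random.Random(seed)

    def clamp(v: int) -> int:
        return max(0, min(255, v))

    return [[tuple(clamp(c + rng.randint(-amount, amount)) for c in px) for px in row]
            for row in frame]


message = b"Reality Hack"
frame = encode_bytes_to_frame(message, width=128, height=64)
received = decode_frame_to_bytes(add_noise(frame), len(message))
print(received)  # b'Reality Hack'
```

The trade-off is bandwidth for robustness: an 8×8 block per bit wastes a lot of pixels, but it’s the kind of redundancy you’d need before trusting a lossy video stream with data.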

Break: Dinner / Opening Ceremonies.

It’s about 7PM at this point. Dinner is where I met Logan and Lewis. Opening Ceremonies were pushed back to 7:30pm ish.

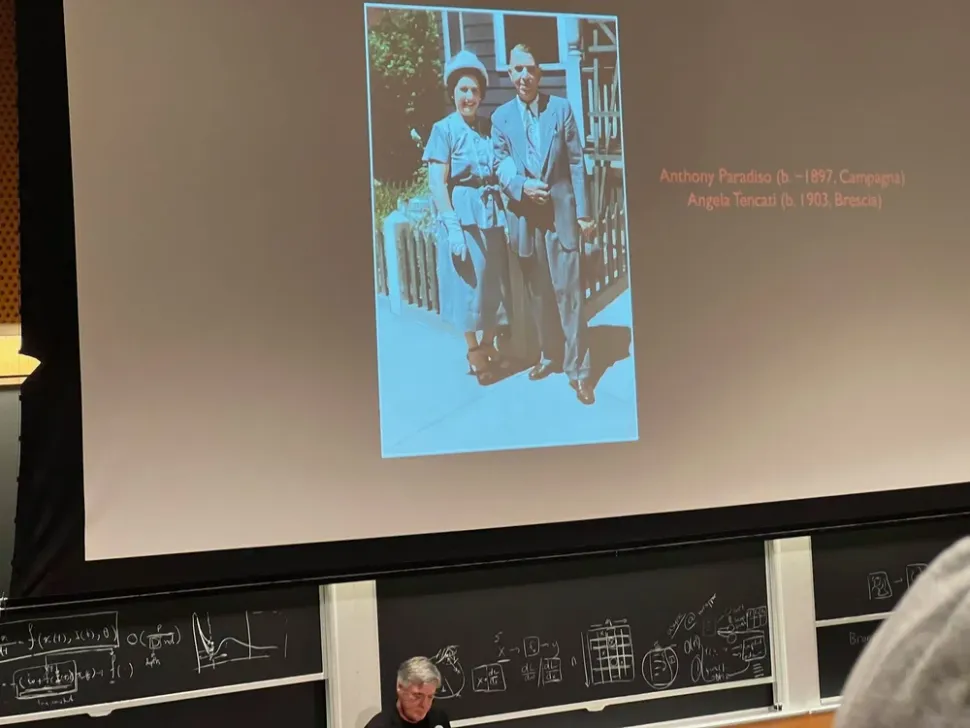

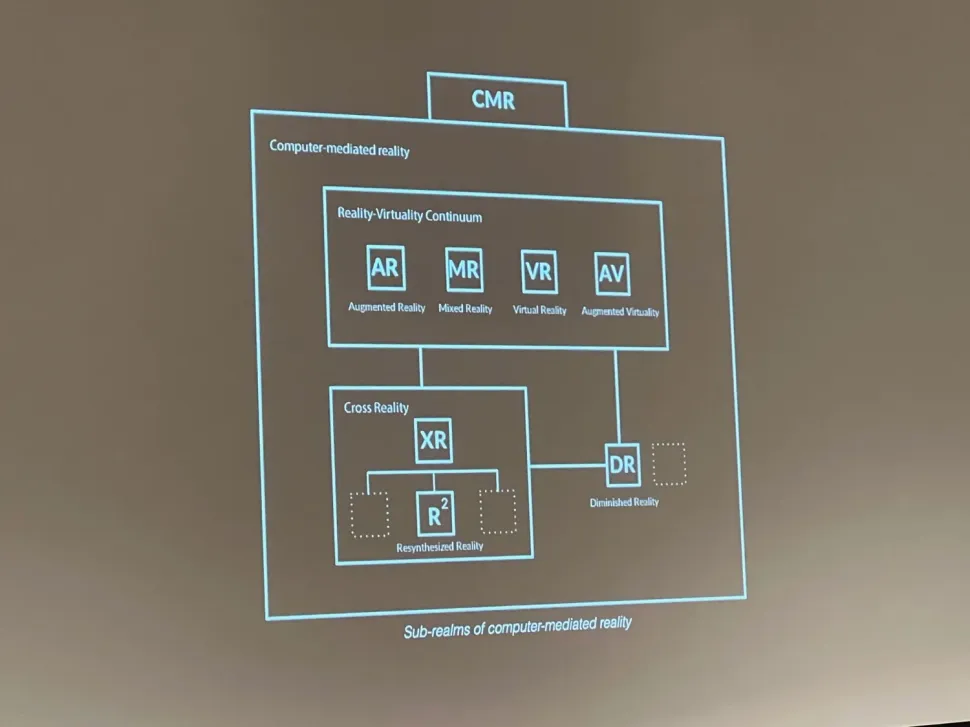

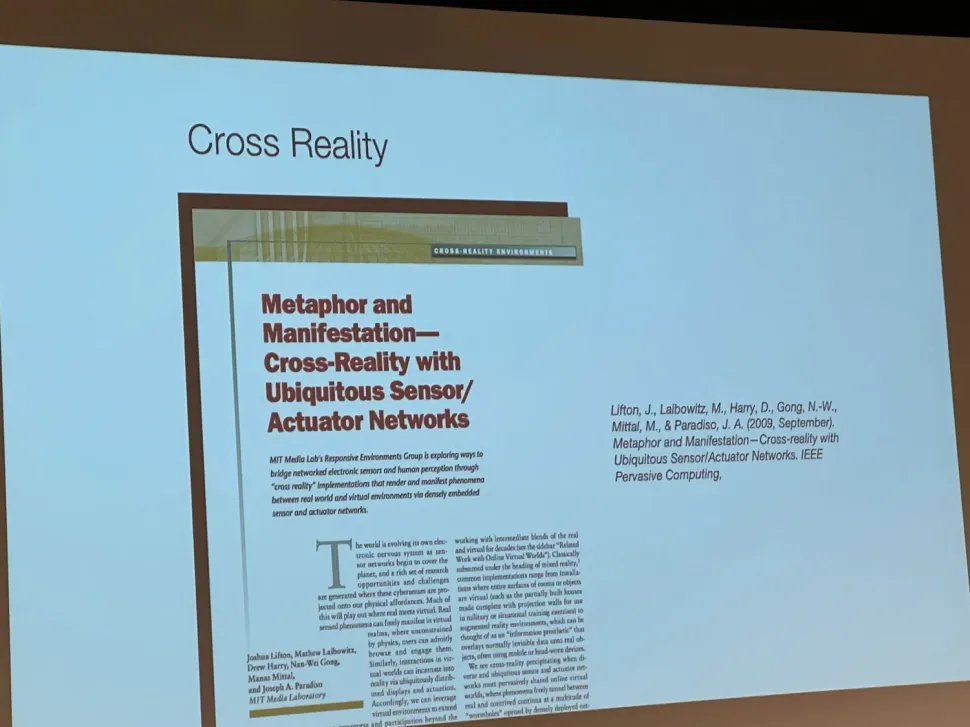

Dr. Joseph A. Paradiso (check out his synths) of the Responsive Environments Lab talked about cross-reality and how our world has changed. Loved his ideas on cross-reality and how he put into context how far technology has come. Similar to his grandparents (though no horse and buggy), my grandmother would take a wagon and walk to get ice for the “fridge”, and would rehydrate salted fish in the tub. She grew up poor in the 1920s and into the Depression. She died a few years ago, and thinking about how much the world changed in 90 years is a bit humbling.

Team Formation

Cross reality and reflections on innovations over time.

I would love to discuss “cross reality” with him, and redefining “extended reality” in a more human context. I have part of a post written on it; it was awesome to hear someone else’s take and to get a new term to look into.

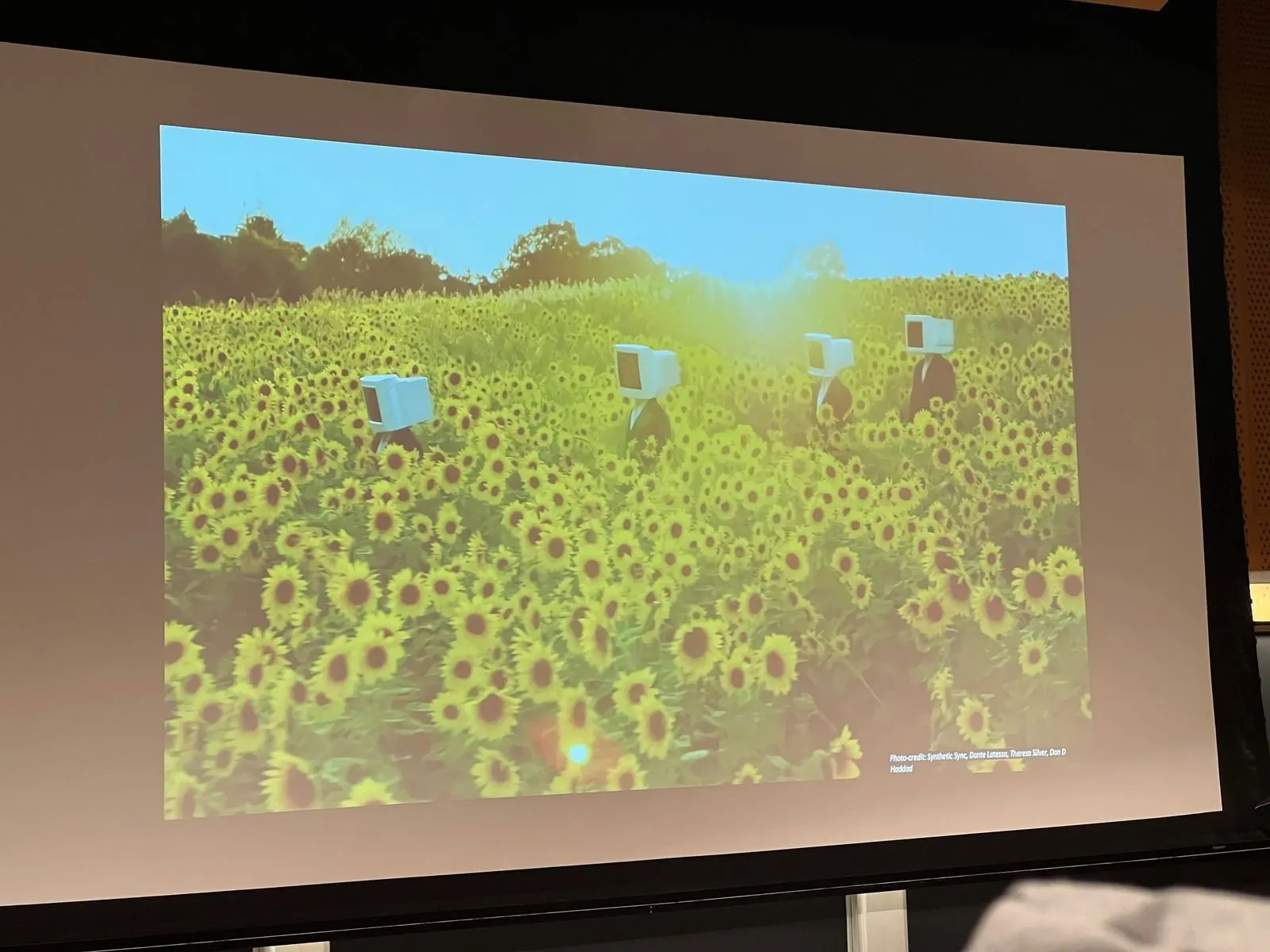

Then two graduate students (Ferrous S Ward and Don Derek Haddad) presented their work on VR and robotics, specifically in relation to moon exploration. As it would turn out, Don has an absolutely sick modular synth setup and played absolute bangers.

Sunflower field with people in suits. The people are wearing CRT monitors.

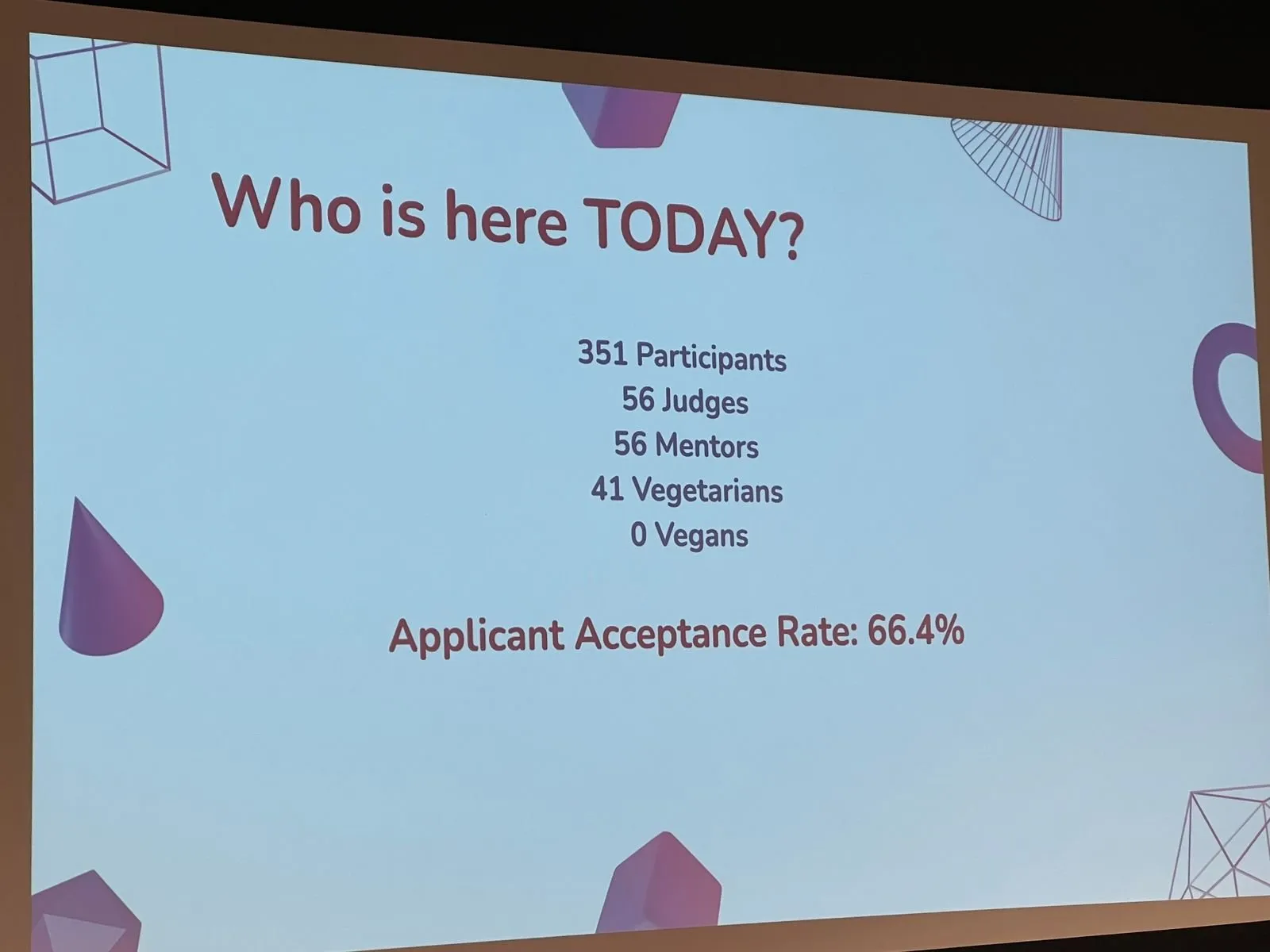

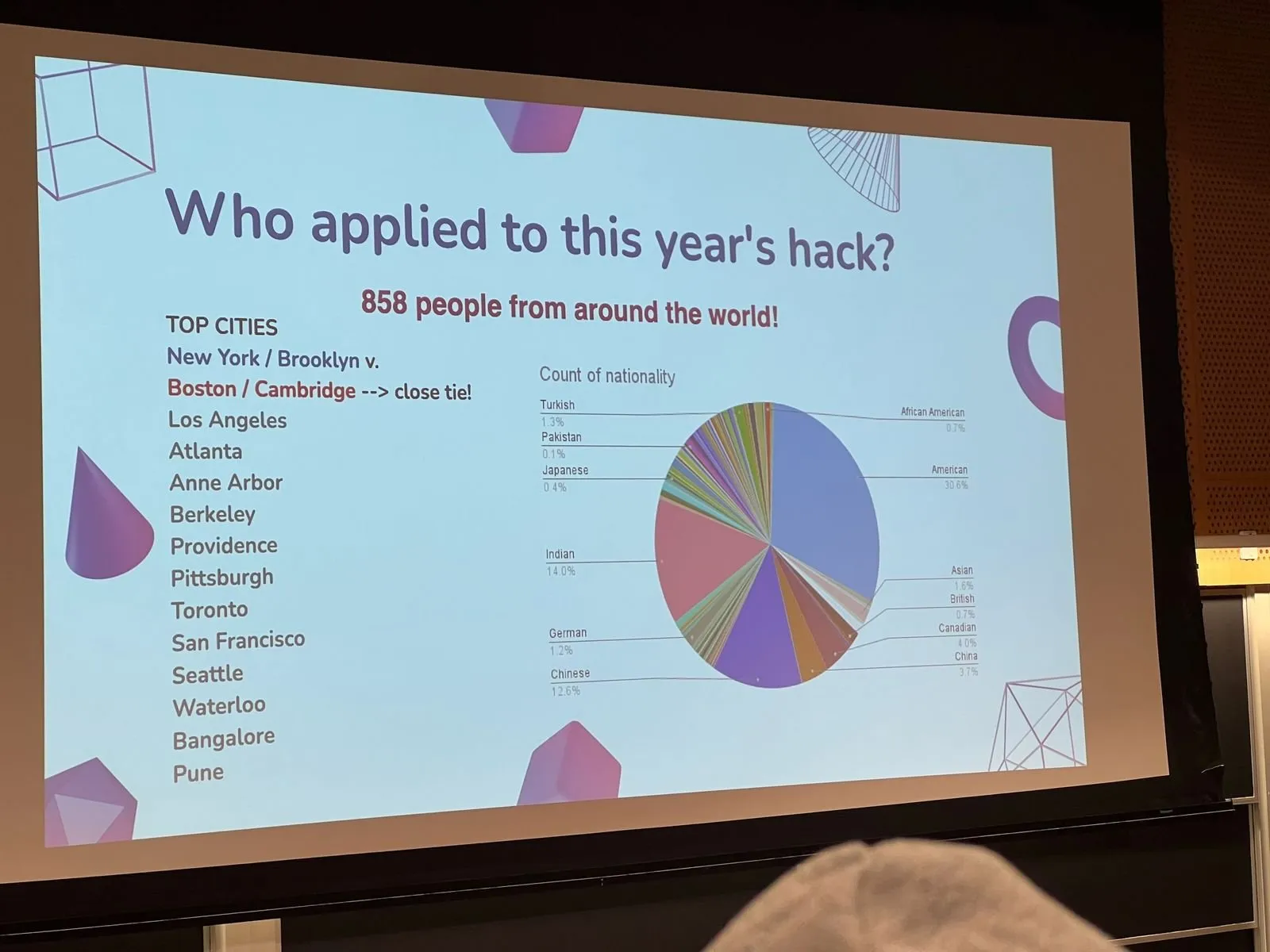

I know I said the sponsor floor was when reality set in. Out of the 800-ish people who applied, 351 were accepted, and I was one of them :).

And there were people from all over the world:

Time to make teams:

The hardware track had amazing people in it, and there definitely was a common vibe (good vibe). I don’t know how to place it.

Team making was chaotic but good. Eventually, once you have your team, have linked up with the people you met that day, and have exchanged Discord handles, it’s time to go back to your hotel and get up early the next day. This is where I would meet Rui Jie, Jacob, Dana, and Whitt.
