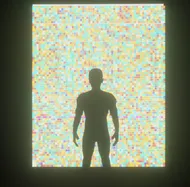

This past year I got a new phone, an iPhone 13 Pro, specifically for its LiDAR sensor and especially to use one app: Polycam. Augmented Reality is an area of computing that I have been interested in since learning about Google Glass and ZeroUI, but I was never able to create anything in it until now.

Let’s first define Augmented Reality vs Virtual Reality:

- AR - overlays computer-generated elements on top of your reality (camera view).

- VR - constructs a new digital world, like a video game.

With Polycam we can scan an object using the LiDAR sensor to capture its texture and depth data and eventually create a mesh. The LiDAR sensor creates a point cloud that Polycam manipulates (read more about the sensor data).
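To make the "point cloud" idea a bit more concrete, here is a minimal sketch of how a grid of depth readings could be turned into 3D points using a simple pinhole camera model. This is only an illustration, not how Polycam or the iPhone actually process LiDAR data: the function name `depth_to_point_cloud`, the tiny 2x2 depth map, and the camera values `fx`, `fy`, `cx`, `cy` are all made up for the example.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of depths in metres) into 3D points.

    fx, fy are focal lengths in pixels and cx, cy is the image centre.
    All of these are hypothetical values, not real iPhone intrinsics.
    """
    points = []
    for v, row in enumerate(depth):      # v = pixel row
        for u, z in enumerate(row):      # u = pixel column, z = depth
            if z <= 0:                   # skip pixels with no depth reading
                continue
            x = (u - cx) * z / fx        # horizontal offset from the centre
            y = (v - cy) * z / fy        # vertical offset from the centre
            points.append((x, y, z))
    return points


# A made-up 2x2 depth map; the 0.0 stands for a missing reading.
depth = [[1.0, 1.0],
         [2.0, 0.0]]
cloud = depth_to_point_cloud(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
```

Each surviving pixel becomes one `(x, y, z)` point. A scanning app would then still need to merge points from many camera positions and fit a textured mesh over them, which this sketch leaves out.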

This app has completely changed how I look at my environment: from just passing by to taking the time to discover the little details.

Here are a couple of my scans:

Polycam:

This is a horse with a blue/red/white design by StayFreshDesign in front of the Syracuse MOST Museum.

This is a dinosaur sculpture I found on the side of the road by the MOST in Syracuse.

This is a pendant light on the outside of the State Tower building entrance (Syracuse, NY).

A guitar we made at MIT Reality Hack 2023:

Many months after I wrote the original version of this blog post, LumaAI came out. Instead of LiDAR, it uses photos to create a Neural Radiance Field (NeRF).

LumaAI:

This is a hand sculpture in downtown Syracuse, NY. Capturing the hand with LumaAI was very quick compared to the LiDAR process with Polycam.

This is an angel sculpture in the Syracuse School of Music:

This is a scene of the stairs in that same school. Notice how the details degrade on the sides.

Using both of these tools taught me which one to use for which application. LumaAI is great for scanning stationary objects, while Polycam is fantastic for large scenes.
