The team on Friday. (Left to right: Me (Edward), Jacob, Whitt (in the headset), Yash, Dana.)

This is part of a series of blog posts detailing my experience at MIT Reality Hack 2023. My team’s project can be found here: https://edwarddeaver.me/portfolio/mit-reality-hack-2023/. This is a continuation of the Day 0 and 1 blog post. If you haven’t read that yet, I would encourage you to do so: https://edwarddeaver.me/blog/mit-reality-hack-2023-blog-day-1/.

At the time of writing it has been about 2 weeks since the event, and my memory is a little hazy. Lack of sleep and high stress (good stress in this case) take a bit more of a toll than they did when I was doing hackathons in high school (shout out Hack Upstate). I’m using chats and photo dates as a guide for myself. This one will be a bit more technical and dive deep into certain topics.

Friday, January 13th - Day 2

Day 2 started bright and early at… 9 AM. We were all collectively dead from the night before.

We got breakfast and started project managing what we were going to do, and breaking up tasks.

This was 10AM :)

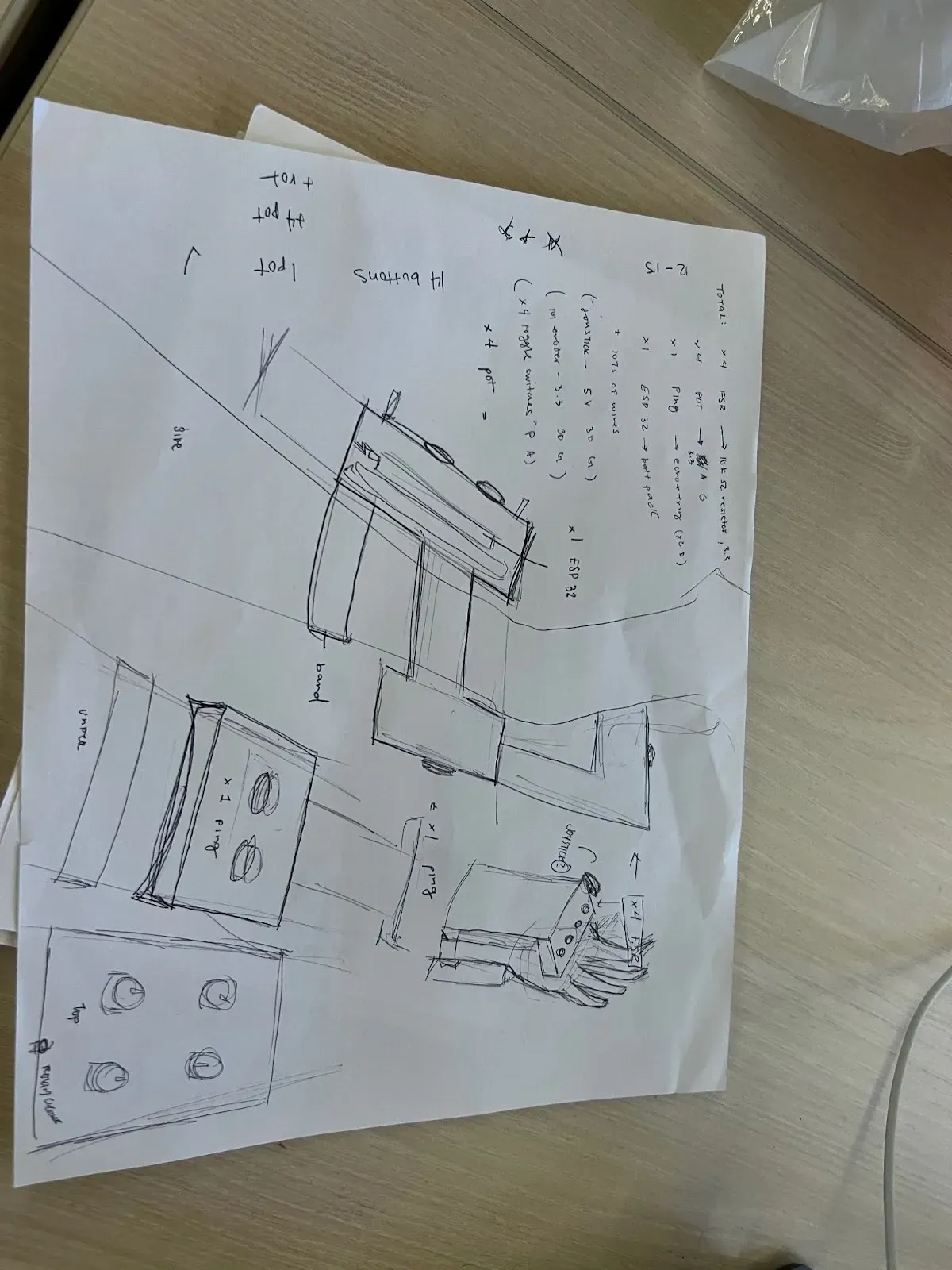

Initially, we discussed creating a hand-based controller similar to Imogen Heap’s, see this video by Reverb to learn more about them:

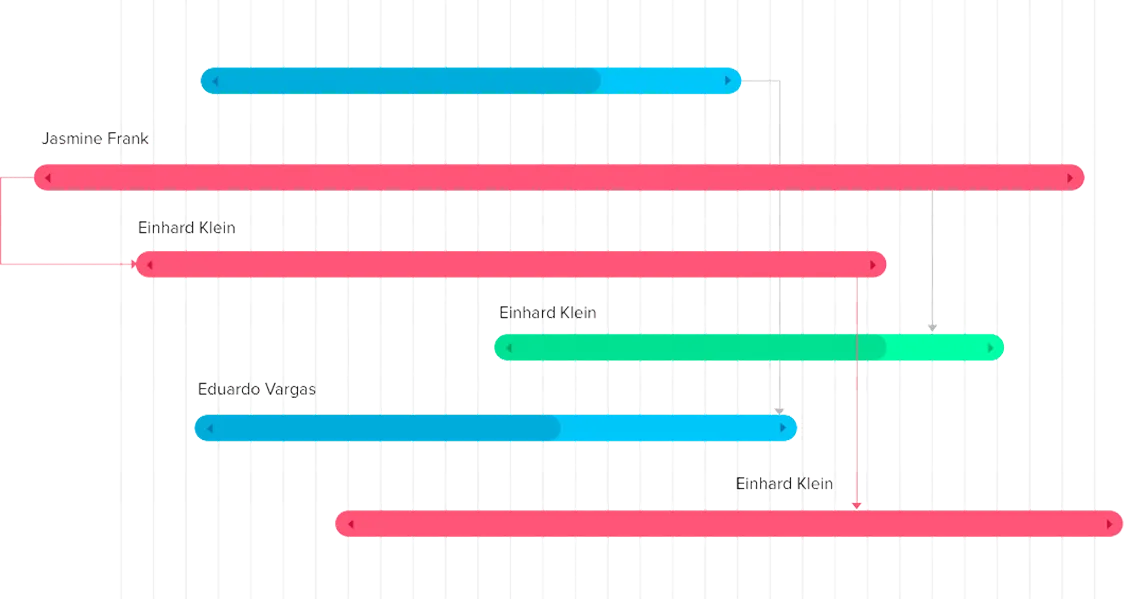

To get started we split tasks up so we could all work asynchronously and come back together with our parts. Whitt would go for the Singularity integration because he had the most Unity experience (this would have us meeting Lucas and Aubrey - both mentors). Dana and I started testing our sensors and getting the code working for them. Yash was working on the mockup for the controller, and Jacob was working on Bluetooth on the ESP32 side. Dividing and conquering was interesting as a lot of us had overlapping skill sets.

Here is a video discussing the idea:

Discussing the idea.

Mock up of the tactile glove:

I started with getting our ultrasonic distance sensor working with the ESP32, which took longer than predicted. Luckily we had the ones with timing crystals on them. Never get the ones without them; your data will be very messy (learned on my Bachelor’s capstone project). For next time, and for planning a project with extreme time constraints, I would give each task X minutes, and cut any task that isn’t completed in that time frame. This would work well for non-core parts of a project, like which sensors you get working, because your physical design can be adapted to the hardware. This would keep a high degree of stress at the beginning but could relieve some at the end because your vision gets automatically scoped.

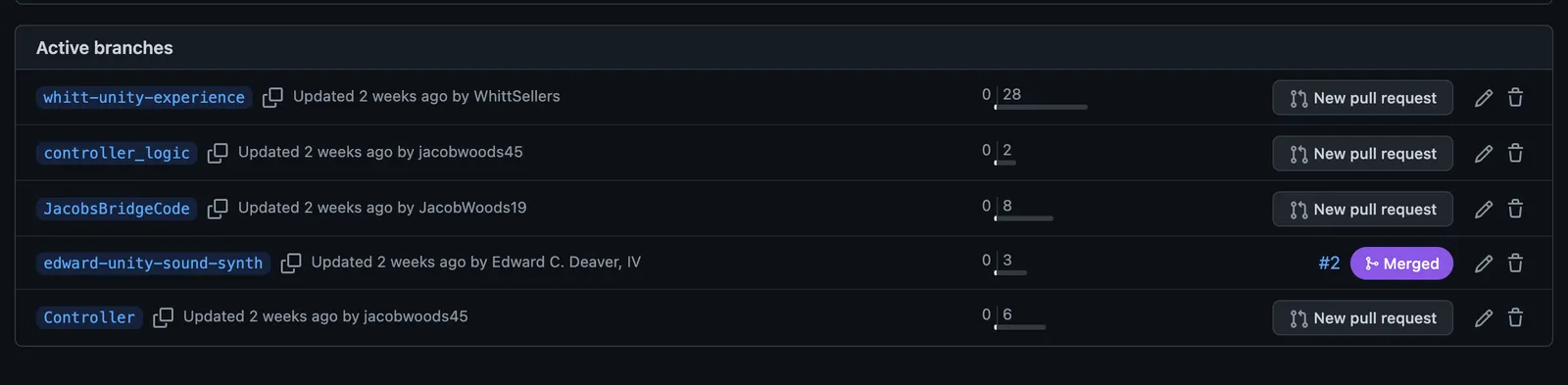

Early on this day I set up our GitHub repository and used branching to maintain our separate code bases. This normally works well, except that there were game engine files in it. The process for adding game files is very manual and requires an extensive .gitignore that Whitt provided. For game design, Plastic SCM or Perforce Version Control Systems are typically used. None of us had a license for those, so Git it was.

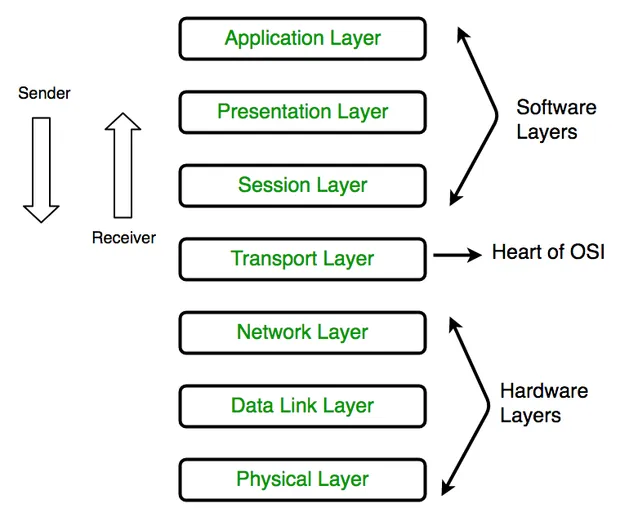

Software-defined vs firmware-defined data:

Before diving into this let’s define those terms. Software in this instance will be any program running on our computers or Quest 2 device, generally running at the application layer (OSI model layer 7). Firmware is the code running on the ESP32 allowing it to interface with the hardware (OSI model layer 1 - Physical Layer).

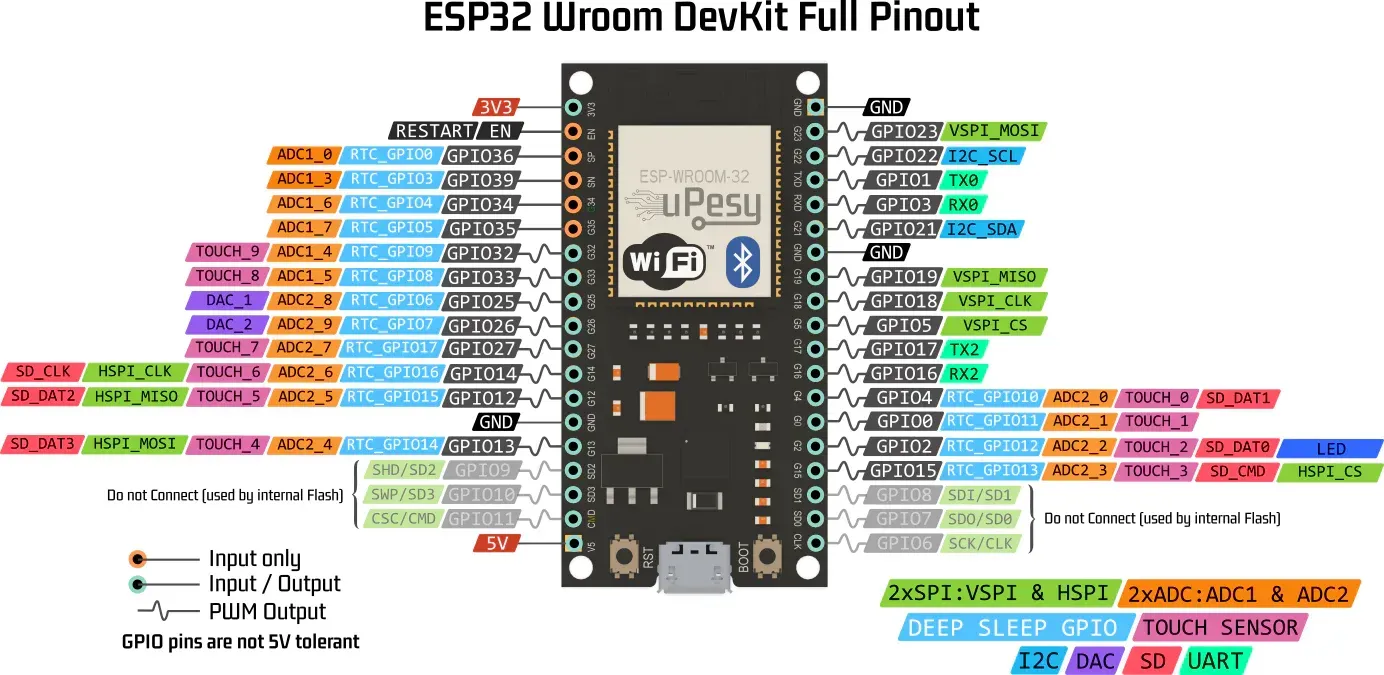

When getting sensors to work on the ESP32, or any microcontroller for that matter, you’ll have digital vs analog inputs. The ESP32 has 18 Analog to Digital Converter pins with a 12-bit resolution, so readings run from a minimum of 0 to a maximum of 4095 (if you were using an Arduino Uno that max would be 1023 because its ADC is 10-bit). So this means our potentiometer values would be in that 0-4095 range. Our ultrasonic sensor would not be, as it works by timing digital pulses. Our buttons would also not be, as they operate as either high or low, 1 or 0. You have a choice in dealing with this data: getting it raw and offloading it, doing some clean up and sending it, or producing finalized analysis on it (lots of if/else statements to send discrete commands). These approaches all have trade-offs: speed vs clean data, clean data vs flexibility of interpretation of that data, speed vs system responsiveness (remember you only have 2 cores, and even with an RTOS you’ll have trade-offs).
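To make those ranges concrete, here is a rough sketch in Python (not the firmware we ran). The function names are made up for illustration, the speed of sound is an assumed room-temperature value, and the echo conversion assumes a sensor that reports a round-trip pulse time in microseconds, which may not match every ultrasonic module.

```python
def adc_max(bits: int) -> int:
    """Largest reading an ADC with the given resolution can report (2^bits - 1)."""
    return (1 << bits) - 1


def normalize(raw: int, bits: int = 12) -> float:
    """Scale a raw ADC reading into 0.0-1.0, clamping out-of-range input."""
    top = adc_max(bits)
    return min(max(raw, 0), top) / top


# Assumption: speed of sound in air at roughly room temperature, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_cm(echo_us: float) -> float:
    """Convert a round-trip echo time (microseconds) to a one-way distance in cm."""
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2


print(adc_max(12))  # 12-bit ADC, e.g. the ESP32
print(adc_max(10))  # 10-bit ADC, e.g. the Arduino Uno
print(normalize(2048))
print(echo_to_cm(1000))
```

Buttons need no conversion at all since they are already 0 or 1, which is part of why mixing the three sensor types in one data stream takes some thought.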

Initially, we discussed the actual values that the ESP32 would send out to address this. You can do processing on the device itself, but you can eventually run into constraints of processing speed. We are working with a system that needs to be as responsive as possible, so the hardware should be dumb and just offload data quickly. I pushed for a software-defined route for our data instead of interpreting it on the ESP32, to give us more flexibility. This can be compared to MIDI controllers controlling a software synth: the controller just sends its raw values over a predefined protocol standard, and then you are left to deal with them in software.
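As a sketch of what "software-defined" means here, the Python below keeps the device side as a dumb bundle of raw numbers and puts all of the meaning on the software side. `RawFrame`, `interpret`, and the 220-880 Hz range are hypothetical names and values chosen for illustration, not what our Unity project used.

```python
from dataclasses import dataclass


@dataclass
class RawFrame:
    """One hypothetical packet of untouched readings from the controller."""
    pot: int     # raw 12-bit ADC value, 0-4095
    button: int  # 0 or 1


def lerp(lo: float, hi: float, t: float) -> float:
    """Linear interpolation between lo and hi for t in 0.0-1.0."""
    return lo + (hi - lo) * t


def interpret(frame: RawFrame, freq_lo: float = 220.0, freq_hi: float = 880.0) -> dict:
    """Turn raw readings into synth parameters entirely on the software side."""
    t = frame.pot / 4095
    return {
        "frequency": lerp(freq_lo, freq_hi, t),
        "amplitude": 1.0 if frame.button else 0.0,
    }


print(interpret(RawFrame(pot=0, button=0)))
print(interpret(RawFrame(pot=4095, button=1)))
```

The appeal of this split is that changing the frequency range, or deciding the potentiometer should control something else entirely, only means editing `interpret`. Nothing has to be reflashed onto the microcontroller.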

Let’s pop out of hardware land and jump to Bluetooth land in Unity:

Bluetooth:

This is where we would come to meet Aubrey and Lucas (I’m trying to link new people in the story as we go along but I might forget). I wasn’t very involved with getting Singularity up and running, I just knew it took a long time. According to Whitt, “So with the singularity it was supposed to be pretty simple and the delay was from not being able to see why it wasn’t working. It was a bit of blind testing but we ended getting it to work by ignoring their instructions on making a custom script with their library and just modified a script they supplied on a prefab that came with the package”

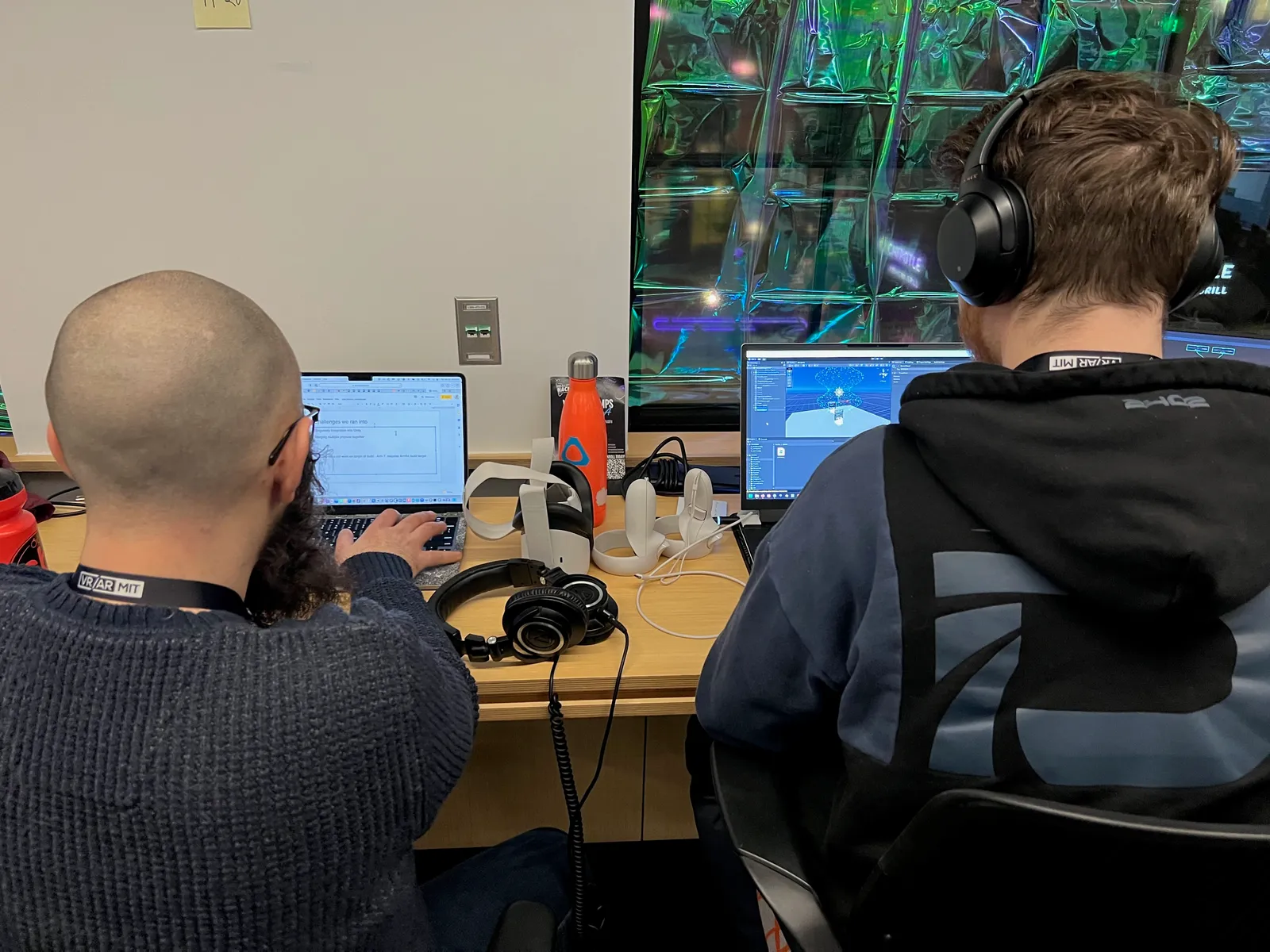

An ESP32 was sending Bluetooth data, and Whitt and Lucas were debugging it. 7 pm Friday night.

Jacob and I (briefly) worked on finding a data format and settled on ArduinoJson, a library that allows you to easily create JSON-formatted data. I was able to point out that a nested for loop would cause poor performance, and I was pretty proud that I remembered Big O.
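I don’t have the exact payload or the loop in question to hand, so treat the following Python as a guess at the general shape. The field names and the `BUTTON_ACTIONS` table are invented for illustration. The idea is that a dictionary lookup per button replaces an inner loop over every possible action, so the work grows with the number of buttons rather than buttons times actions.

```python
import json

# Hypothetical payload; the real field names are not shown in this post.
packet = '{"pot": 2048, "distance_us": 1000, "buttons": [1, 0, 0, 1, 0]}'

# Hypothetical mapping from button index to an action name.
BUTTON_ACTIONS = {0: "play_note", 1: "change_lfo", 3: "mute"}


def pressed_actions(raw: str) -> list:
    """Return the actions for every pressed button in one JSON packet."""
    data = json.loads(raw)
    # One pass over the buttons with a constant-time dict lookup each,
    # instead of looping over every action for every button.
    return [
        BUTTON_ACTIONS[i]
        for i, state in enumerate(data["buttons"])
        if state and i in BUTTON_ACTIONS
    ]


print(pressed_actions(packet))
```

With only a handful of buttons the difference is tiny in absolute terms, but on a path that runs for every packet it is an easy saving to take.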

A test ESP32 would be kept around to send data to the Quest. Also, Bluetooth interference would start to become an issue.

Sound Synthesis (Csound):

How do you make noise in Unity? I didn’t know so I asked “Can Unity generate audio or would a tool like PureData/MaxMSP/VCV Rack be better?” to the Reality Hack Discord. Thank you to Russell, Antonia, Aubrey and Yiqi for helping me out. Big ups to Aubrey for suggesting Csound and Csound for Unity (here are some sketches of it).

Csound is so interesting. It’s a real time synthesis library that was made at MIT Media Lab in the 1980s by Barry Vercoe. The history of Csound is fascinating and how it flowed into other tools like PureData and MaxMSP. If you have a few minutes dive into the Wikipedia page for it.

This is what a Theremin instrument is in Csound:

    <Cabbage> bounds(0, 0, 0, 0)
    form caption("Theremin") size(700, 300), guiMode("queue"), pluginId("thm1")
    rslider bounds(  0, 40, 80, 80), valueTextBox(1), textBox(1), text("Att."), channel("Attack"), range(0, 5, 0.15)
    rslider bounds(100, 40, 80, 80), valueTextBox(1), textBox(1), text("Gain"), channel("Gain"), range(0, 1, 0.65)
    rslider bounds(200, 40, 80, 80), valueTextBox(1), textBox(1), text("Glide"), channel("Glide"), range(0, 1, 0.15)
    rslider bounds(300, 40, 80, 80), valueTextBox(1), textBox(1), text("Lfo Freq"), channel("Lfo"), range(0, 100, 7)
    rslider bounds(300, 40, 80, 80), valueTextBox(1), textBox(1), text("Lfo Amp"), channel("LfoAmp"), range(0, 100, 8.5)
    rslider bounds(400, 40, 80, 80), valueTextBox(1), textBox(1), text("Filter Freq"), channel("FiltFreq"), range(100, 5000, 1000)
    rslider bounds(500, 40, 80, 80), valueTextBox(1), textBox(1), text("Filter Res"), channel("FiltRes"), range(0, 0.95, .066)
    rslider bounds(600, 40, 80, 80), valueTextBox(1), textBox(1), text("Table"), channel("Table"), range(0, 3.99, 1.5)
    </Cabbage>
    <CsoundSynthesizer>
    <CsOptions>
    -n -d
    </CsOptions>
    <CsInstruments>
    sr = 48000
    ksmps = 64
    nchnls = 2
    0dbfs = 1

    chn_k "Frequency", 1
    chn_k "Amplitude", 1

    ;courtesy Iain McCurdy
    opcode lineto2,k,kk
     kinput,ktime xin
     ktrig changed kinput,ktime ; reset trigger
     if ktrig==1 then ; if new note has been received or if portamento time has been changed...
      reinit RESTART
     endif
     RESTART: ; restart 'linseg' envelope
     if i(ktime)==0 then ; 'linseg' fails if duration is zero...
      koutput = i(kinput) ; ...in which case output simply equals input
     else
      koutput linseg i(koutput),i(ktime),i(kinput) ; linseg envelope from old value to new value
     endif
     rireturn
     xout koutput
    endop

    instr 1

    kat chnget "Attack"
    kport chnget "Glide"
    kfreq chnget "Frequency"
    kamp chnget "Amplitude"
    klfo chnget "Lfo"
    klfoamp chnget "LfoAmp"
    kfiltf chnget "FiltFreq"
    kfiltres chnget "FiltRes"
    kgain chnget "Gain"
    ktable chnget "Table"
    kPortTime linseg 0, 0.001, 1
    kEnvTime linseg 0, 0.001, 1

    kcps lineto2 kfreq, kPortTime * kport
    kenv lineto2 kamp, kEnvTime * kat
    kff lineto2 kfiltf, 0.01
    kfr lineto2 kfiltres, 0.01

    alfo lfo klfoamp, klfo, 0
    ftmorf ktable, 99, 100
    aosc oscili kenv, kcps + alfo, 100
    aout moogladder aosc, kff, kfr

    ; left right output
    outs aout*kgain, aout*kgain
    endin

    </CsInstruments>
    <CsScore>
    ;causes Csound to run for about 7000 years...
    f0 3600
    f1 0 16384 10 1 ; Sine
    f2 0 16384 10 1 0.5 0.3 0.25 0.2 0.167 0.14 0.125 .111 ; Sawtooth
    f3 0 16384 10 1 0 0.3 0 0.2 0 0.14 0 .111 ; Square
    f4 0 16384 10 1 1 1 1 0.7 0.5 0.3 0.1 ; Pulse
    f5 0 16384 10 1 0.3 0.05 0.1 0.01 ; Custom
    f99 0 5 -2 1 2 3 4 5 ; the table that contains the numbers of tables used by ftmorf
    f100 0 16384 10 1 ; the table that will be written by ftmorf
    i1 0 3600
    </CsScore>
    </CsoundSynthesizer>

If you are interested in this stuff but also want to go lower check out the Electro Smith Daisy platform. From talking to their community on Discord a lot of the Csound ideas are implemented into the core library.

This is a demo of me controlling the synth in C#:

Video of theremin in Unity.

Code from above:

    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    namespace Csound.TableMorph.Theremin
    {
        [RequireComponent(typeof(CsoundUnity))]
        public class theraminSynth : MonoBehaviour
        {
            public float frequencyField = 60.0F;
            public float amplitudeField = 1.0F;
            public float gainField = 1.0F;
            public float lfoField = 100F;
            public float tableField = 3.99F;

            [SerializeField] Vector2 _freqRange = new Vector2(700, 800);
            CsoundUnity _csound;

            IEnumerator Start()
            {
                _csound = GetComponent<CsoundUnity>();
                // Wait until Csound has finished initializing before touching channels.
                while (!_csound.IsInitialized)
                    yield return null;

                _csound.SetChannel("Frequency", frequencyField);
                _csound.SetChannel("Amplitude", amplitudeField);
                _csound.SetChannel("Gain", gainField);
                _csound.SetChannel("Lfo", lfoField);
                _csound.SetChannel("Table", tableField);
            }

            void Update()
            {
                if (!_csound.IsInitialized)
                    return;

                // Push the inspector fields to Csound every frame.
                _csound.SetChannel("Frequency", frequencyField);
                _csound.SetChannel("Amplitude", amplitudeField);
                _csound.SetChannel("Gain", gainField);
                _csound.SetChannel("Lfo", lfoField);
                _csound.SetChannel("Table", tableField);
            }

            // When pressed in JSON call this and play (button 0 pressed)
            // playNote(1.0) // Perfect fifth

            // Amplitude should be 0 - 1f
            public void setAmplitude(float Amplitude)
            {
                // Use the argument rather than amplitudeField, so the call has an effect.
                _csound.SetChannel("Amplitude", Amplitude);
            }

            public void setFrequency(float Frequency)
            {
                _csound.SetChannel("Frequency", Frequency);
            }

            // position - button position on fret board 0 at top
            public void playNote(float position)
            {
                _csound.SetChannel("Frequency", frequencyField * (position * 1.5));
            }

            public void changeLFO(float lfoVariable)
            {
                _csound.SetChannel("Lfo", lfoVariable);
            }
        }
    }

People:

During the day we met Jim (mentor), who loved our idea and gave us math lessons on music theory. It was awesome! Stoked off that. He talked to us about circuit bending, and guess what he brought on Saturday? The Guitar Hero controller, along with a Bop It and other toys.

Also talked to the other teams around us like Hong and others (these are the parts that have blurred together).

Night time:

There was a nice social.

Walking back to the hotel I saw this sticker that said Lorem Ipsum (trust me, that’s what this blurry photo says). This is the nerdiest sticker I’ve ever seen.

Saturday, January 14th - Day 3

Wow, that was just Friday, I got tired even writing that.

Good morning…On the walk through the park I saw this. I don’t know what sport this is but I’ve never seen one of these in a park before. It kinda looks like the sport featured in The Road to El Dorado (2000) pok-ta-pok. Someone mentioned in the Discord it might be Quidditch … maybe ¯\_(ツ)_/¯ . Even in the Cambridge PDF of parks it’s not mentioned. Is it like a very space efficient basketball hoop? Why is there a key on the ground behind it?

Check out this demolition:

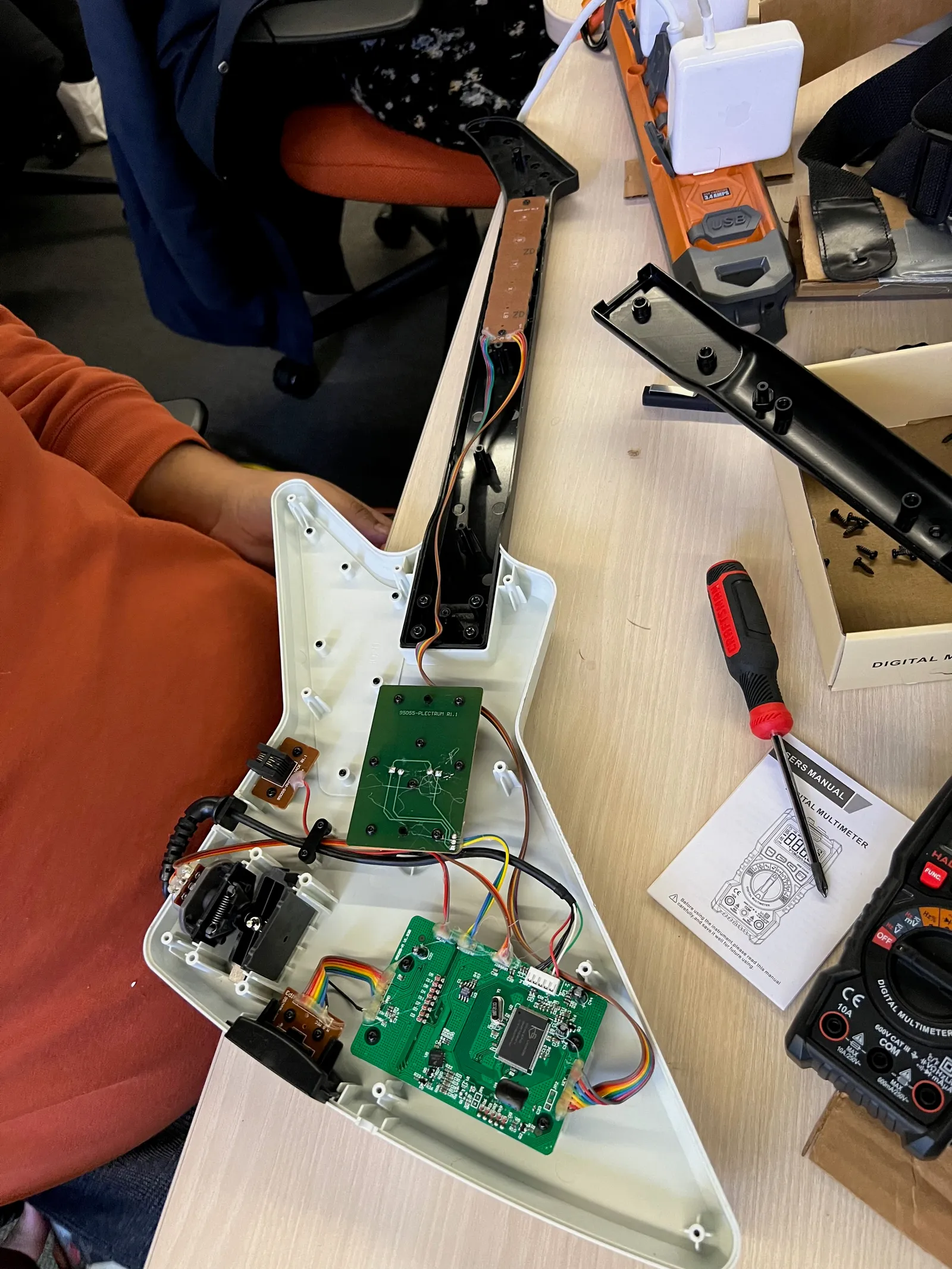

Today Jim came with the toys, and in his Santa bag of gifts was a Guitar Hero controller. Upon seeing it we scrapped the glove idea and went with re-using the controller, participating in the Circular Economy.

Internals of a Guitar Hero controller. Much simpler than modern electronics.

Dana continued on working on sensors, Jacob worked on getting Unity parsing the ESP32 content, Whitt worked on the VFX graph for particles, and I worked on Csound more.

At some point we went for a walk:

You can see Boston across the river, MIT, MIT and then me.

Thank you to Aubrey for the MIT history: this signature in lights, “The End of Signature, Agnieszka Kurant used artificial intelligence to create two different collective signatures realized as monumental light sculptures adorning the facades of two new buildings in Kendall Square” (learn more):

The afternoon turned into integration hell, trying to merge my project into Whitt’s, and Jacob’s into Whitt’s. Also, Yash and Dana had the task of switching from hand glove hardware and sensors to repurposing the guitar.

Csound note: If you don’t hear audio in the headset, make sure you are compiling for ARM64/ARMv7 and not just ARMv7. That was an hour of panic wondering if Csound was compatible.

I want to pause here and say without our mentors and friends we could not have completed this project: Thank you to Jim Sussino (Mentor), Riley Simone, Greg (Mentor, XR Terra), Aubrey Simonson (Mentor), Lucas (Mentor) and Chris Smoak. Seriously, without their help we couldn’t have done it.

This is a short video showing Dana and Jacob talking about JSON in Unity:

This is a short video showing Dana and Jacob talking about JSON over Bluetooth in Unity.

Integrating my code with Whitt’s:

Sunday, January 15th - Day 4

T-12 hours till judging. Feeling like a train on fire (thanks to Stable Diffusion for making that).

Wore the MIT Reality Hack shirt for the final day / judging.

Today was a race against the clock.

Whitt would eventually hardcode the device name into it to bypass the UI breaking.

When my part was done, I started work on our DevPost and compiling footage for the video. Dana wrote the voice over, and I recorded and edited it. It was fun to crank out a video in an hour.

It working:

Final thoughts: my team was simply amazing.

The next post will be the last in this series talking about the judging process and the public expo (lots of photos for both).
